Introduction:

Artificial Intelligence (AI) is reshaping many industries by enabling faster and, in many cases, more accurate decisions. As AI becomes increasingly integrated into our lives, its use in decision-making raises crucial ethical questions. While AI offers numerous benefits, it also introduces risks, including biased outcomes and threats to human autonomy. In this post, we explore the ethical considerations surrounding the use of AI in decision-making and the steps needed to ensure responsible AI implementation.

The Role of Bias in AI Decision-Making:

One of the most significant ethical challenges with AI lies in the potential bias that can emerge from the data on which it is trained. AI systems learn from historical data, which may contain inherent biases based on societal prejudices and historical discrimination. When these biases are not addressed, AI algorithms can perpetuate and exacerbate existing inequalities. We will delve into examples and strategies to mitigate bias in AI decision-making.
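One common first step in spotting bias is to compare how often each group receives a favorable decision. The sketch below is a minimal illustration, not a complete fairness audit: it computes per-group selection rates and the gap between the highest and lowest rate (often called the demographic parity difference). The function names, the group labels, and the toy data are all hypothetical and chosen only for illustration.

```python
from collections import defaultdict


def selection_rates(decisions, groups):
    """Return the share of favorable decisions (1) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += decision
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(decisions, groups):
    """Difference between the highest and lowest group selection rate."""
    rates = selection_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical toy data: 1 = favorable decision, 0 = unfavorable.
decisions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = selection_rates(decisions, groups)
gap = demographic_parity_gap(decisions, groups)
print(rates)  # {'a': 0.75, 'b': 0.25}
print(gap)    # 0.5
```

A large gap does not prove discrimination on its own, and a small gap does not rule it out. It is a signal that the data and the model deserve a closer look.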

Transparency and Explainability:

Another ethical concern in AI decision-making is the “black box” problem: AI systems can be so complex that it is difficult to explain how a particular decision was reached. This lack of transparency and explainability can erode trust in AI decisions. Users should be able to find out how decisions are made, especially in critical domains like healthcare, finance, and law. We will discuss the importance of interpretability and ways to make AI more transparent.
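For simple models, an explanation can be as direct as showing how much each input pushed the result up or down. The sketch below assumes a linear scoring model, where each feature's contribution is its weight multiplied by its value. The weights, feature names, and applicant values are hypothetical, and this approach does not carry over unchanged to complex models such as deep neural networks.

```python
def explain_linear_score(weights, bias, features):
    """Return the score and each feature's contribution, largest effect first."""
    contributions = {
        name: weights[name] * value for name, value in features.items()
    }
    score = bias + sum(contributions.values())
    # Rank by absolute size so strong negative effects are not hidden.
    ranked = sorted(
        contributions.items(), key=lambda item: abs(item[1]), reverse=True
    )
    return score, ranked


# Hypothetical model and applicant, for illustration only.
weights = {"income": 0.5, "debt": -1.0, "years_employed": 0.25}
bias = -0.5
applicant = {"income": 4.0, "debt": 1.5, "years_employed": 2.0}

score, ranked = explain_linear_score(weights, bias, applicant)
for name, contribution in ranked:
    print(f"{name}: {contribution:+.2f}")
print(f"score: {score:.2f}")
```

Presenting the ranked contributions alongside the decision gives the affected person something concrete to question or correct.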

Accountability and Responsibility:

As AI systems become more autonomous, the question of accountability arises. Who should be responsible when AI makes an incorrect or biased decision? Should the developers, users, or the AI itself be held accountable? Understanding and assigning responsibility in AI decision-making is vital to ensure that errors and negative consequences are addressed appropriately.

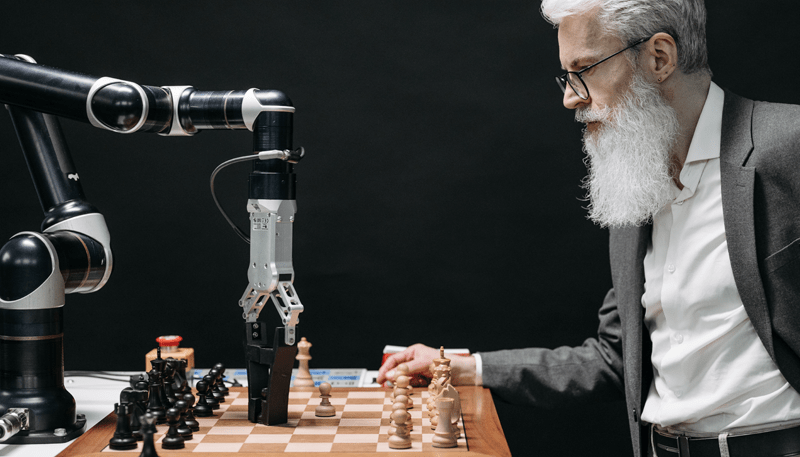

The Impact on Human Autonomy:

The use of AI in decision-making processes raises concerns about human autonomy. When humans rely heavily on AI-generated suggestions, their ability to make independent and informed choices might be compromised. Striking a balance between AI assistance and preserving human agency is crucial to maintain our capacity for critical thinking and decision-making.

Ethical Frameworks for AI Decision-Making:

Various ethical frameworks have been proposed to guide the responsible development and deployment of AI. We will explore the principles that most of them share: fairness, transparency, accountability, and privacy. Understanding these principles can help developers and organizations navigate the ethical challenges associated with AI decision-making.

Ensuring Data Privacy and Security:

AI relies on vast amounts of data to function effectively, and that data often includes personal information. Ensuring data privacy and security is therefore essential to protect individuals from misuse of their information. We will discuss the importance of data protection regulations and best practices to safeguard sensitive data in AI decision-making processes.
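One widely used safeguard is to replace direct identifiers with pseudonyms before data reaches a training or decision pipeline. The sketch below uses a keyed hash (HMAC-SHA256) from the Python standard library. The function name, the key, and the sample record are hypothetical. Note that pseudonymized data can still be re-identified by anyone who holds the key, so this is a risk-reduction measure and not full anonymization.

```python
import hashlib
import hmac


def pseudonymize(identifier: str, secret_key: bytes) -> str:
    """Map an identifier to a stable pseudonym using a keyed hash."""
    digest = hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()


# Hypothetical key: in practice, keep it outside the dataset and the code.
secret_key = b"example-key-stored-separately"

record = {"email": "jane@example.com", "loan_amount": 12000}
safe_record = {
    "user_id": pseudonymize(record["email"], secret_key),
    "loan_amount": record["loan_amount"],
}
print(safe_record)
```

Because the same input always yields the same pseudonym, records for one person can still be linked for analysis without exposing the underlying email address.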

Building Public Trust in AI:

For AI to be widely accepted and beneficial, public trust is paramount. We will explore the factors that influence public trust in AI and the steps that governments, businesses, and developers can take to foster transparency, openness, and accountability in AI applications.

Conclusion:

The use of AI in decision-making processes has the potential to enhance efficiency and effectiveness across various domains. However, we must address the ethical considerations associated with AI to avoid reinforcing biases, compromising human autonomy, and eroding public trust. By implementing transparent and accountable AI systems, adhering to ethical frameworks, and prioritizing data privacy, we can harness the power of AI responsibly and ensure a more equitable and just future.